JamComments Now Offers AI-Powered Moderation

You can now use an LLM to moderate new comments submitted through JamComments. Here's the why and how.While AI has absolutely flooded the digital product space for the past ~year, I've been relatively hesitant about its role within digital products. LLMs in particular are incredibly useful (I use them almost every day), but there've been a lot of relatively uninspiring product applications and so much hype. Frankly, many of the product using AI as their flagship feature solve a pain I have to be convinced I'm suffering from. They're cool, but a hard sell.

For that reason, I've been resistant to using it within my own products. I didn't want to bolt on an AI-powered feature for the sake of flashy marketability. If was going to use it, it'd better be legitimately helpful.

Well, I think I've actually found one (technically, credit goes to Cameron Pak for suggesting it).

Moderation is Hard

Anytime you're moderating user-generated content, you're in for some level of hassle. That's just part of the package when you need to balancing the free promotion of ideas with the appropriateness of the resulting content in a given context. If you err on the side of thorough moderation, you need to exert a lot of energy to do it well, and you risk stifling expression. Other other hand, taking a more laissez-faire approach carries a whole set of different risks.

In my experience with them, LLMs are pretty good at discerning linguistic expressions according to a set of principles you define. And especially with some guardrails in place, I really do think it can be a reliable moderator for this sort of purpose.

That's why I've rolled out AI-powered moderation for JamComments. You define the principles it to be used when moderating a comment, and the machine will handle it from there. You no longer need to choose between auto-approving every new comment, or manually moderating each one yourself.

Using AI Moderation

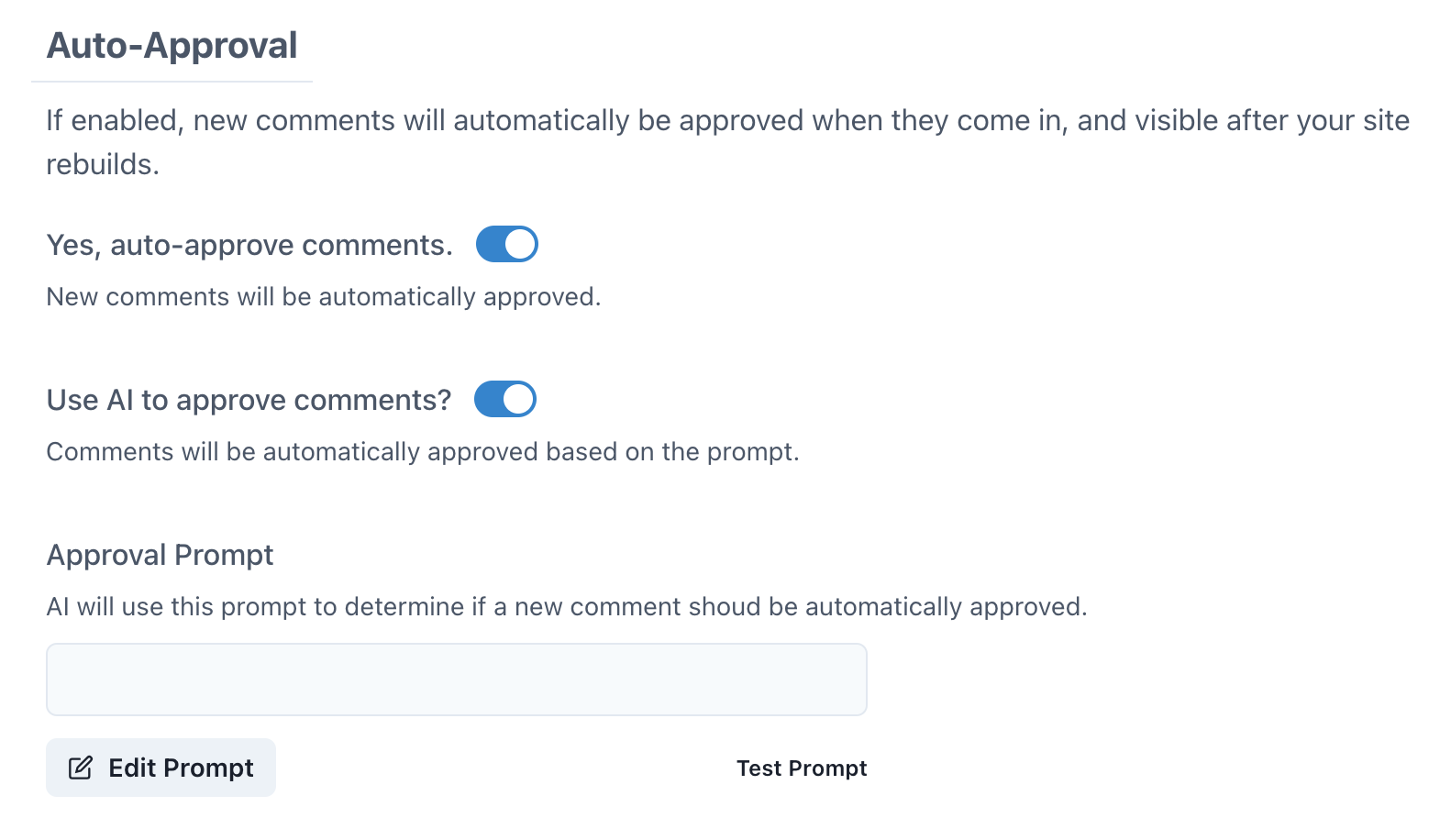

There really isn't anything revolutionary going on here. After signing up for a paid account, all you need to do is enable AI-moderation on your site's settings page:

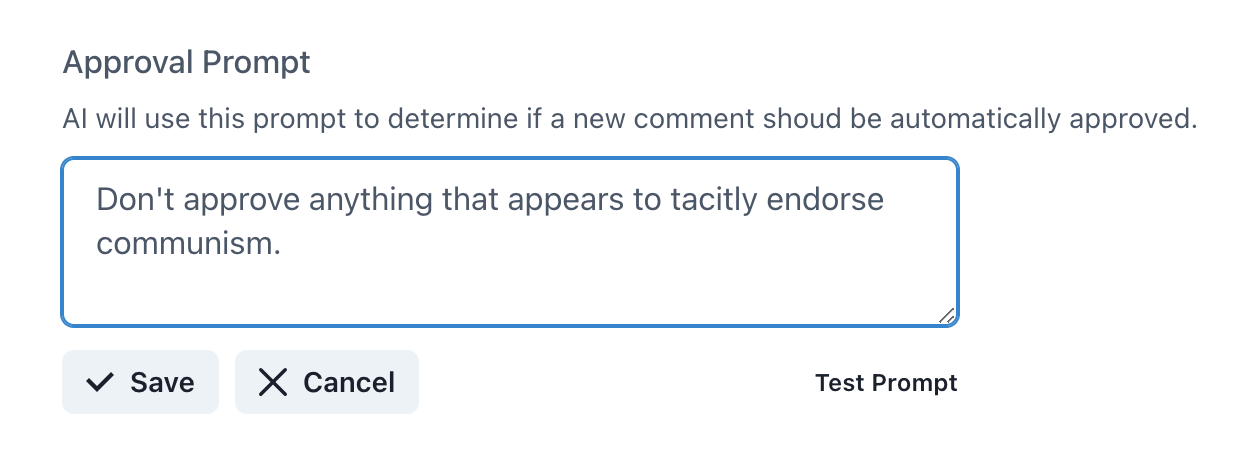

After that, define a prompt the LLM will use to assess a comment.

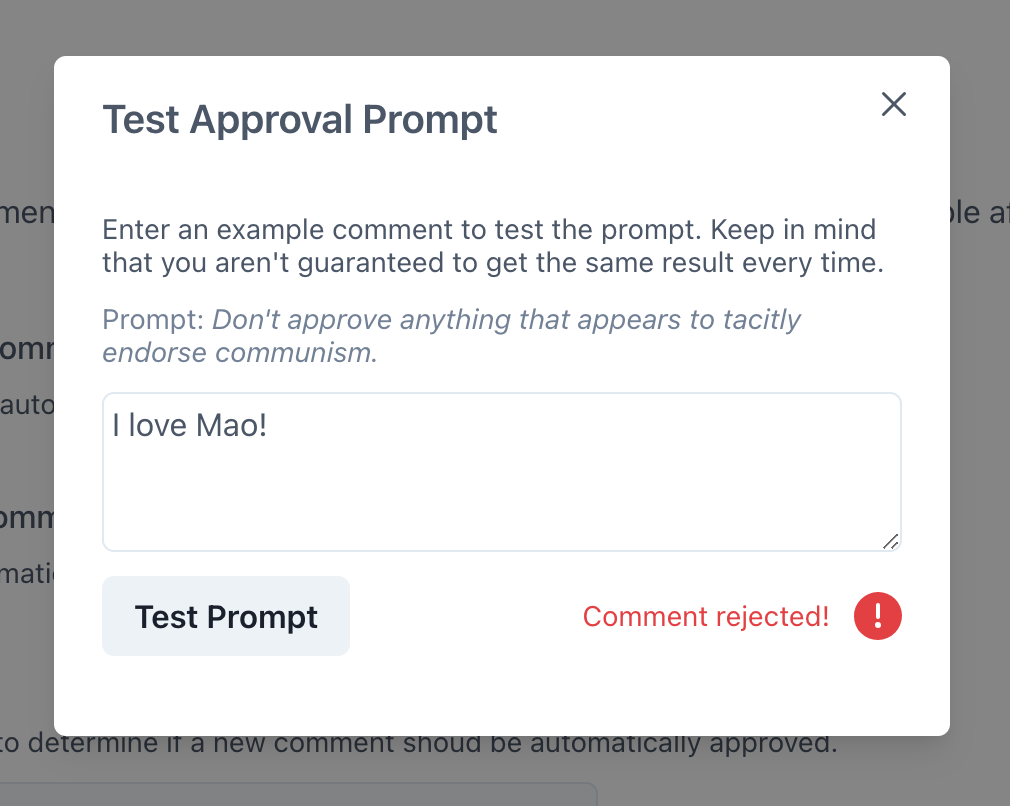

AI-powered anything won't ever be deterministic, so you're welcome to test it on a few comments yourself before sticking with it:

The feature is powered by Anthropic's Claude, and is integrated with a healthy number of boundaries to prevent any sort of abuse or injection attacks.

Possible Use Cases

Ever since Camron first suggested the idea, my brain started stewing on the potential applications for different types of content. Here are some:

For your blog, you might want to avoid approving comments that contain any foul language. Possible prompt:

Do not approve any comments that contain foul language or cursing according to the language used by the typical English-speaking American. Some expressions are OK (like "heck" or "darn it"), but nothing beyond that level of intensity.

Or maybe you run a faith-based digital magazine that allows reader-submitted comments and you want to make sure no inappropriate content is submitted:

You're moderating comments made on articles for an online, faith-based magazine. Only approve comments that are neutral or uplifting in their spirit, and that do not disparage a person or group of people. Do not allow anything that encourages people to live against basic Christian principles.

For one more example, perhaps you run an e-commerce store and you'd like to keep reviews civil.

The comment you are moderating is a product review. Do not allow the review if the person says they did not purchase the product. Do not allow cussing. Please DO approve comments that offer serious criticism in a mature way.

You get the idea.

Give It a Shot

I realize that JamComments is in a rather interesting position because it's centered around written content – right up the alley of LLMs. So, I'm eager to see how this feature is leveraged, as well as if other opportunities arise for using it further. So, please — give it a shot and send me your feedback!