On Building Structured Data with Client-Side JavaScript

Thinking through some of the trade-offs and benefits in using client-side JavaScript to generate structured data.

Web crawlers and client-side rendered single-page applications (SPAs) have had a weird relationship for a while now. Google has long said they're able to crawl content built with JavaScript, but it's still generally been recommended that you server-render that content for maximum SEO benefit.

That’s always made sense. It’s more laborious for bots to crawl JS-rendered content, and as such, it can take longer to index. Plus, not all search engines say they crawl it in the same way Google does. Server-rendering's a safer bet.

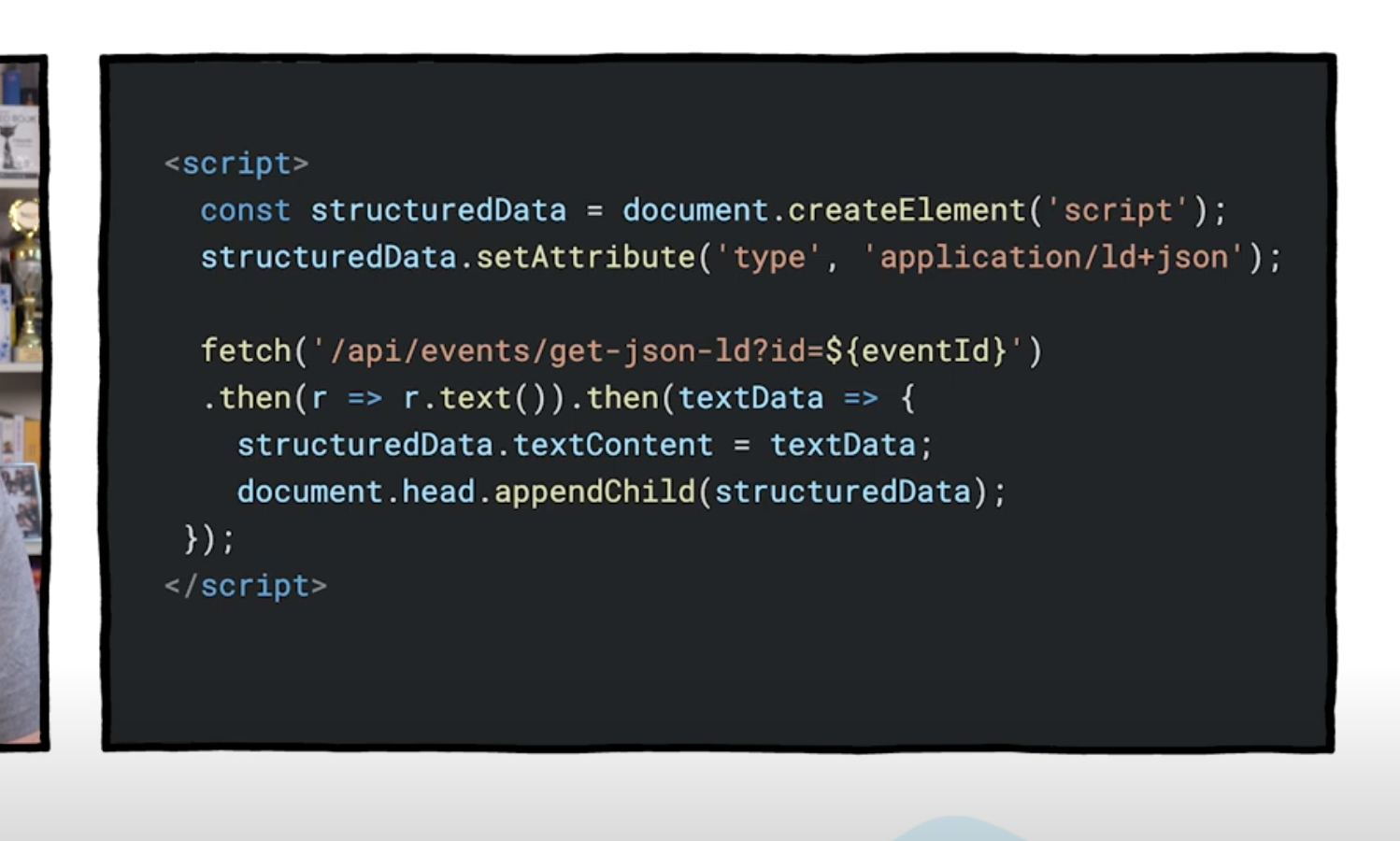

With that in mind, I found it interesting to see Google be even more insistent about their ability to parse structured data built with client-side JavaScript. They even have multiple resources on doing it, like this page and this video, with some pretty clear code snippets on the practice.
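For reference, here's a minimal TypeScript sketch of the general pattern those resources describe. It builds a schema.org object at runtime, serializes it, and appends it to the page inside a `<script type="application/ld+json">` element. The `Article` fields and the `buildArticleJsonLd`/`injectJsonLd` helper names are illustrative assumptions. This is not Google's exact snippet.

```typescript
// Illustrative sketch: helper names and Article fields are assumptions.
type JsonLd = Record<string, unknown>;

// Build a schema.org Article from data the client already has at runtime
// (e.g. the response of an API call an SPA makes after it loads).
function buildArticleJsonLd(
  headline: string,
  authorName: string,
  datePublished: string, // ISO 8601 date string
): JsonLd {
  return {
    "@context": "https://schema.org",
    "@type": "Article",
    headline,
    author: { "@type": "Person", name: authorName },
    datePublished,
  };
}

// Browser-only: append a <script type="application/ld+json"> element to
// <head>. Setting textContent means the JSON is never parsed as HTML.
function injectJsonLd(data: JsonLd): HTMLScriptElement {
  const script = document.createElement("script");
  script.type = "application/ld+json";
  script.textContent = JSON.stringify(data);
  document.head.appendChild(script);
  return script;
}
```

In the browser, you'd call something like `injectJsonLd(buildArticleJsonLd(post.title, post.author, post.publishedAt))` once the data is available. Here, `post` is a hypothetical stand-in for whatever your app fetched.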

I stumbled across all of this while building automated structured data support into JamComments, and I knew it'd inform how I designed that feature. So, I had a vested interest in thinking about this a bit more.

Who would care about this?

A server-rendered approach is the best way to construct any of your content (including structured data), but I can see the usefulness of being able to inject it on the client. A few use cases come to mind, including one I've experienced personally.

First, love it or hate it, SPAs have become a normal part of the web. Many of them are both highly interactive and content-heavy, with very good reason to want that content indexed. Twitter/X is a great example. You won't find the text of a tweet/post in the raw HTML source, but it'll still show up in search results. For these sites, being able to leverage structured data without fundamentally changing their rendering strategy is a no-brainer, especially when you see the numbers behind rich snippets' effectiveness.

Second, the markup generated by content management systems (especially large ones with several parties editing content at once) can be rigid, difficult to customize, and slow to iterate on. This has incentivized creators to use tools like Google Tag Manager to inject or manipulate page content on their own terms (and caused many technical SEO specialists' stress levels to spike). Despite the problems it often causes, it's hard to turn down that level of agency when it's right there in front of you.

Finally, there's a (maybe smaller) group out there who'd like to augment existing structured data with supplementary user-generated content, but who either can't or would prefer not to take over managing it completely. For them, it's easier & simpler to swoop in via client-side JavaScript. This is exactly where JamComments landed. When comments are left on a blog post, I wanted an out-of-the-box, non-invasive way to enhance the structured data already on the page. (Although, with a bit more configuration, you can have it all server-rendered too.)
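Here's a rough TypeScript sketch of that kind of augmentation. It finds the JSON-LD block already on the page, parses it, and merges in schema.org `Comment` entries through the `comment` property. This is not how JamComments actually implements it. The following are all hypothetical:

- the `UserComment` shape
- the helper names
- the assumption that the page has a single top-level `BlogPosting` or `Article` object (rather than an `@graph` array)

```typescript
// Hypothetical comment shape; not JamComments' actual data model.
interface UserComment {
  author: string;
  text: string;
  createdAt: string; // ISO 8601 date string
}

type JsonLd = Record<string, unknown>;

// Return a copy of an existing BlogPosting/Article object with schema.org
// Comment entries appended to its `comment` property. The input is not mutated.
function withComments(existing: JsonLd, comments: UserComment[]): JsonLd {
  // `comment` may be absent, a single object, or already an array.
  const prior =
    existing.comment === undefined
      ? []
      : Array.isArray(existing.comment)
        ? existing.comment
        : [existing.comment];
  const added = comments.map((c) => ({
    "@type": "Comment",
    author: { "@type": "Person", name: c.author },
    text: c.text,
    dateCreated: c.createdAt,
  }));
  return { ...existing, comment: [...prior, ...added] };
}

// Browser-only: rewrite the first BlogPosting/Article JSON-LD block in place.
// Returns false if no matching block was found.
function enhanceExistingJsonLd(comments: UserComment[]): boolean {
  const scripts = document.querySelectorAll<HTMLScriptElement>(
    'script[type="application/ld+json"]',
  );
  for (const script of Array.from(scripts)) {
    let data: unknown;
    try {
      data = JSON.parse(script.textContent ?? "");
    } catch {
      continue; // Skip malformed blocks instead of throwing.
    }
    if (typeof data !== "object" || data === null || Array.isArray(data)) continue;
    const type = (data as JsonLd)["@type"];
    if (type === "BlogPosting" || type === "Article") {
      script.textContent = JSON.stringify(withComments(data as JsonLd, comments));
      return true;
    }
  }
  return false;
}
```

This rewrites the existing block instead of appending a second one. That keeps the comments attached to the same `BlogPosting` entity, rather than describing a separate, disconnected object.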

Are the drawbacks worth it?

You guessed it: "it depends." Two main considerations were swirling in my head while thinking through this. Fortunately, neither of them is a deal-breaker.

Slow Indexing

To start, human-readable content constructed by JavaScript is slower to parse & index, and I'm assuming processing structured data faces similar challenges. It's not something Google would like to admit, but you see a good deal of that type of feedback scattered around the internet. This example, written just a couple of years ago, saw indexing take nearly nine times longer for JavaScript-rendered content than server-rendered. That's not trivial.

Of course, two years is a long time on the internet, and Google definitely has an interest in speeding things up, so it's possible indexing is significantly quicker now. On top of that, processing structured data is technically a different game with potentially different protocols. It's certainly something to keep in mind, but in my opinion, not a reason to avoid it altogether.

Search Engine Crawler Support

A common objection to client-side rendered content has been the belief that Google's the only player that can do it, and so you're leaving a lot of SEO chips on the table by neglecting other engines. But that's certainly changed over the years.

Bing states they can do it as well (although it does recommend serving content dynamically based on the user agent), and a number of other engines source their results from Bing. All that aside, Google itself still enjoys over 91% of the search market share, so you wouldn't be missing out on much anyway. And again, I can only see this concern becoming less relevant as time marches forward.
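As a rough illustration of the user-agent-based approach Bing describes (usually called dynamic rendering), a server could route traffic two ways:

- Known crawlers get prerendered HTML, with structured data already in the markup.
- Everyone else gets the client-rendered app.

The token list and function names below are assumptions for illustration. A production setup would typically rely on a maintained list and verify crawlers, rather than trusting the `User-Agent` header alone.

```typescript
// Illustrative, non-exhaustive crawler tokens (matched case-insensitively).
const CRAWLER_TOKENS = ["googlebot", "bingbot", "duckduckbot", "yandexbot"];

function isKnownCrawler(userAgent: string): boolean {
  const ua = userAgent.toLowerCase();
  return CRAWLER_TOKENS.some((token) => ua.includes(token));
}

// Crawlers get prerendered HTML (JSON-LD already in the markup); everyone
// else gets the client-rendered SPA shell.
type ResponseVariant = "prerendered" | "spa";

function chooseResponseVariant(userAgent: string | undefined): ResponseVariant {
  return userAgent !== undefined && isKnownCrawler(userAgent) ? "prerendered" : "spa";
}
```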

Like I said, no deal-breakers here. Just good ol' tension to manage.

So, now what?

You might remember the battles we had years ago over whether a website must work without JavaScript. In my opinion, proponents of progressive enhancement got overly idealistic, treating sites that required JavaScript as if they'd committed some low-level crime against humanity.

I think we've touched some grass since then, realizing that expecting users to enable JavaScript on the web really isn't much of an ask, and that we're probably better off building websites assuming they always do. On the Syntax podcast, Wes Bos & Scott Tolinski recently discussed the idea of navigating the web without JavaScript enabled. I think they're right. A bit from that episode:

I understand the whole idealistic concept behind it, and I realized we're probably loading a lot of JavaScript on the client, but, really, no one's doing that... People [are] probably gonna get mad at me for that, but I don't buy it. It's not gonna happen. - Scott Tolinski

That's how I'm coming to think of SEO and client-side rendering – including structured data generation. Yes, server-rendering everything is probably still the best SEO play. But you're not sinning if you don't, and you're not completely opting out of the SEO game either. Some zealot is probably gonna be torqued by your decision, but hopefully any drama that ensues will just fuel an insane amount of traffic to your site.

As with most decisions like this, the key thing is to use your brain. Consider your constraints & trade-offs, and make a decision. You'll be fine either way.